I’m working on building a Google Data Studio (GDS) Connector; for those unfamiliar, Google Data Studio is a free BI (Business Intelligence) reporting platform, similar to Microsoft BI or Tableau. A connector, or “Community Connector” (a connector not built by Google) is an Apps Script powered bundle of code (Javascript) that enables Google Data Studio to connect to any third part service, by acting as the relay in-between.

As I’ve been working, I’ve been more than a little frustrated by the lack of in-depth documentation and troubleshooting guides available, so this post is both a way for me to fill in some of those gaps for others, as well as a way to vent my anger 🙂

Tip: Review the community connectors repo

If you get lost or stuck, and are wondering “how have others done this?”, you might find it helpful to check out the “community-connectors” repo on Github. These are connectors that various people have developed, that are open-sourced and varied in usage.

Tip: Testing new code without deploys

If you want to test how new code actually works with GDS, you might be tempted to either reconnect to Data Studio or create a brand new deployment. Both are actually unnecessary if you just want to test for just yourself and are not pushing to production. When you click “Deploy from manifest…” in the “Publish” menu dropdown, that alone will actually push your code to any connectors that were setup by clicking the special link under “Latest Version (Head)”.

So, for example, if I’ve just added a new “console.log()” line to my code and want to instantly see the new message appear in StackDriver, I would add the line of code, save, then go to “Menu -> Publish -> Deploy from manifest”, and then, in another tab, simply refresh a Google Data Studio report that uses the connector.

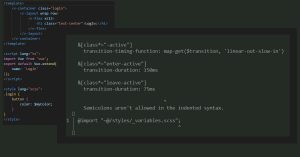

Tip: Use TypeScript

Due to the complexity of writing a GDS connector and the amount of different types involved, I recommend using TypeScript, especially if you are used to using statically typed languages. I started writing my connector in vanilla Javascript, realized how complex things were getting, and rewrote it quickly into TS. During the rewrite process, I caught several bugs in my code purely through looking at TypeScripts type-checking errors. That is not to say that TS will prevent you from writing broken code, but I find it helps to keep things orderly and avoid what I would call “silly” programming mistakes. Part of this is personal preference as well.

I would only advise this if you have at least a little familiarity with TypeScript, or are looking to learn and have the extra time for it.

For how to use TypeScript with GDS: I’ve already written a guide for using TypeScript with GAS projects, and it applies to Google Data Studio Connectors as well.

Tip: Use TypeScript definition files

Even if you are not using TypeScript, one of the awesome things about VSCode is that you can still get the intellisense / auto-suggest capability to take advantage of TypeScript definition files. Just make sure you have a package.json file, and install “@types/google-apps-script”. Once you do that, even if you are writing your connector in vanilla JS, you should be able to take advantage of the improved intellisense:

Tip: Additional resources:

There are a bunch of great resources out there for help on building a connector, beyond the official guides.

Here are some in particular that stood out to me:

- Looking at code in the “community-connectors” repo.

- “Building a custom Google Data Studio connector from A-Z” by Jan Bajena

- This massive compilation of GDS resources by @Helpfullee.

- The “DataVis DevTalk” series on Youtube by Google Cloud Platform (especially talks by @_mkazi_)

Gotcha: Unexpected data formatting rules

I’ll just go ahead and say it: the way Google Data Studio expects data to be formatted is… well… a little strange. The format of the data in a field that you return to GDS is usually referred to as a “semantic type”. The GDS docs have a nice table of the possible formats here.

Noticed anything odd about that table? I sure did! There are several random semantic types that, despite always holding an integer value with no zero-padding, such as “QUARTER” and “DAY_OF_WEEK”, expect a string.

Furthermore, there is no field to hold a full datetime/timestamp value! The date field with the highest precision is “YEAR_MONTH_DAY_HOUR”, and that only gets as precise as the hour. If you really wanted to pass a full timestamp to GDS, you could pass an epoch / unix timestamp in a NUMBER field, and then leave it up to the user to format it into any date format they would like with a “calculated field” and the “TODATE()” function.This solution also allows you to ignore timezone discrepancies, as the epoch does not need to take those into account (at least not for the purpose of passing it to GDS for their TODATE to handle).

EDIT: On 09/09/2019, they added support for “YEAR_MONTH_DAY_SECOND” (format: YYYYMMDDHHMMSS), which is a massive improvement considering that previously the highest precision date-time field was a specific hour (YEAR_MONTH_DAY_HOUR). Thanks GDS!

Gotcha: Debugging, Logging messages and errors

Debugging:

There isn’t really a way to use the built-in GAS debugger as the connector runs, such as when a user navigates a report that uses the connector within Google Data Studio, or runs through the configuration setup. However, you could create functions that “mock” those actions, and then debug them by selecting the function in the “Select function” dropdown, and hitting the “debug” button.

Logging:

Since there is no real way to “debug” a community connector as it runs, you will likely find yourself putting in lots of print statements when you are trying to track down an error. If you are not seeing your messages show up, check how you are logging them.

The old school way is to use “Logger.log(message)”, but that only works if your code is initiated directly within the Apps Script IDE. In general, you should avoid Logger, and instead use “console.log()”. Calls to console methods log to StackDriver, which you can find by going to “view -> StackDriver Logging” inside the Apps Script IDE.

Also, note that the syntax for “console.log()” in GAS is not exactly the same as in your browser. Here is the reference page.

Gotcha: Timezones

There are a few things to note about using Timezones with GDS. First, both the GDS platform and the Apps Script environment use UTC standard time (aka GMT) as the default. So you will need to take that into account when returning date/time fields.

Timezones are complicated (and annoying), so I won’t even attempt to try to pretend I can solve this for you, but I will give some possible approaches:

- Always return everything in UTC-0. This is probably the safest solution, but requires coding offsets for every piece of data you are relaying from another API to GDS.

- Worse yet, if the API does not have a specific endpoint to query what the users timezone is, you might have to “sniff” it based on date/time strings…

- Offer users a configuration setting where they can enter their timezone in hours offset from UTC, then inside your connector, add or subtract that number from every date field. This is the approach used by the openweathermap community connector.

- If the API you are requesting data from allows for you to specify a timezone, give it UTC-0 as the desired format, and then you won’t need to offset the data it returns before passing to GDS.

Gotcha: Promises, async/await

Here is the deal; yes, you can actually use promises and async/await inside Google Apps Script (and thus Google Data Studio connect0rs), but, you probably don’t want to.

The issue is that pretty much all required functions are synchronous in nature, and are expecting to be returned a non-promise value. For example, if you are wrapping API requests in promises, there is no way for you to return the value inside the promise to getData, and then return it to GDS. You can’t make getData async to use await inside it, so you might be tempted to write:

function getData(request){

let apiResponse;

myApiPromise.then((result)=>{

apiResponse = result;

});

return apiResponse;

}…but in this case, your promise would start to execute, and then while it is still executing, your return statement would hit the return line and instantly return “undefined” to GDS. This would actually trigger a “column number does not match request” error (see this gotcha item).

If you insist on using promises, there are just a few steps to take to get them working.

- Add the es6-promise polyfill to your project. The easiest way is to simply copy and paste from the minified source into an Apps Script code tab.

- Since es6-promise relies on setTimeout, which does not exist in the GAS environment, you need to manually polyfill that as well. Copy and paste this code into your project:

-

function setTimeout(cb, ms) { Utilities.sleep(ms); cb(); }

-

- Done! You should now be able to use polyfill and async/await just like normal! Just don’t use them in places where they are not expected.

Gotcha: The order of requested fields

FIXED! You can now ignore this section.

This issue has been addressed and actually fixed by GDS 🙂

You can find their PR mentioning a patch here.

I’ll leave the below section for historical reasons, or if this issue ever resurfaces.

Previously encountered issue:

When GDS requests a set of data, there are three places where field order has the potential to change; in the request.fields, in the order that you return schema in the getData() return object schema property, and the order you return columns in the return object rows->values array. The first one does not really matter, but what does matter is that your schema field order and rows column order match, or else it will throw an error, usually “Failed to fetch data from the underlying data set”.

Here is the “gotcha”; the way that Google recommends you build schema in response to a getData request is actually very likely to cause an issue with this. They recommend that you use getFields().forIds(), and pass in the array of field IDs that were requested by GDS. The catch? This is not documented, but I caught forIds() returning fields in an order that did not match the order of the array passed to it!

Here is an example. Take a look at this code:

function getData(request) {

// Raw request field ids - ['day','time','cost',...]

var requestedFieldIds = request.fields.map(function (field) { return field.name; });

// Should return special fields obj collection

var requestedFields = getFields().forIds(requestedFieldIds);

// Map back to an array of field ids - ['day','time','cost',...]

var requestedFieldsMappedBackToIds = requestedFields.asArray().map(function (field) { return field.getId(); });

myConsole.log({

"Raw Request Ids": requestedFieldIds,

"GDS getFields().forIds() mapped back to IDs": requestedFieldsMappedBackToIds

});

// ... rest of getData() left out

}One might assume that both arrays of field IDs should be identical in my console.log() result. After all, to generate the second array, the first ordered array was used as the input. But…

{

"Raw Request Ids": [

"billableMoneyTotal",

"entryDescription",

"time"

],

"GDS getFields().forIds() mapped back to IDs": [

"entryDescription",

"billableMoneyTotal",

"time"

]

}Argh! forIds() randomly swapped the order of two of the columns! Because I was using the variable requestedFieldIds to generate a response to getData() in later code, my connector was failing because my schema had the flipped order, but my rows did not. Definitely something to be aware of (and would be great if Google could document somewhere). So basically, the rule of thumb should be to not rely on the order of fields/columns requested in getData(request), but instead focus on your result.schema and rows.rows[{values[]}] columns matching.

Gotcha: Unhelpful errors

“The number of columns received in the data returned from the community connector does not match the number of columns requested by Data Studio”

Despite this error being one that you are most likely to encounter, there is very little detail about it on the internet, and virtually none from Google’s own documentation. This StackOverflow question is one of the few search results (although maybe now this page will come up!).

This error message is directly linked to whatever you are returning from “getData()”. As the error text would imply, Google is saying that the number of columns returned by your code in getData does not match the number of columns that it requested, which you are supposed to read by checking the contents of the “request”, the only argument to getData.

Let’s walk through an example scenario. If I use “console.log(request)” inside “getData(request)”, this is what the request object looks like in our example case:

{

"configParams": {

"workspaceId": "9234225"

},

"dateRange": {

"endDate": "2019-06-29",

"startDate": "2019-06-29"

},

"fields": [

{

"name": "day"

},

{

"name": "time"

}

]

}

The important thing to note in the above request from GDS is that there are exactly two fields requested, “day” and “time”. Now, in our return object, both returnObject.schema and returnObject.rows[x].values, there should be exactly two columns returned by our code. Here is an example that would fail with the “number of columns does not match” error for the above request:

function getData(request){

return {

"cachedData": false,

"schema": [

{

"dataType": "STRING",

"name": "day",

"label": "Date",

"semantics": {

"conceptType": "DIMENSION",

"semanticType": "YEAR_MONTH_DAY"

}

},

{

"dataType":"STRING",

"name":"entryDescription",

"label":"Entry Description / Title",

"semantics": {

"conceptType":"DIMENSION",

"semanticType":"TEXT"

}

},

{

"defaultAggregationType": "SUM",

"dataType": "STRING",

"name": "time",

"description": "Logged Time",

"label": "Time",

"semantics": {

"isReaggregatable": true,

"conceptType": "METRIC",

"semanticType": "DURATION"

}

}

],

"rows": [

{

"values": [

"20190629",

"Entry Alpha",

"1245"

]

},

{

"values": [

"20190629",

"Entry Bravo",

"1981"

]

},

{

"values": [

"20190629",

"Entry Charlie",

"1570"

]

}

]

}

}Did you spot the error? I returned an extra field, “entryDescription”, which was not requested! Here is a different returned object that should satisfy the request and make the error go away.

function getData(request){

return {

"cachedData": false,

"schema": [

{

"dataType": "STRING",

"name": "day",

"label": "Date",

"semantics": {

"conceptType": "DIMENSION",

"semanticType": "YEAR_MONTH_DAY"

}

},

{

"defaultAggregationType": "SUM",

"dataType": "STRING",

"name": "time",

"description": "Logged Time",

"label": "Time",

"semantics": {

"isReaggregatable": true,

"conceptType": "METRIC",

"semanticType": "DURATION"

}

}

],

"rows": [

{

"values": [

"20190629",

"1245"

]

},

{

"values": [

"20190629",

"1981"

]

},

{

"values": [

"20190629",

"1570"

]

}

]

}

}Now, obviously, you would not want to be blindly returning hardcoded objects like this; your getData function should inspect every request, check what fields are needed, and dynamically construct the proper return object based on those fields. As such, your getData function is likely to be the most complicated method in your project

If the above did not solve your problem:

Now, the reason why I put this error in the “Gotcha” section is that the above example is usually why this error appears, but it will actually appear if getData() returns ANYTHING other than what matches the request. What I mean by this, is that this code will trigger the error:

function getData(request){

return false;

}Although Google’s error about the column number not matching is technically correct in this case (0 columns !== number > 0 in request), it is not helpful. They really should have a separate error for when getData returns a completely malformed response, that has 0 columns, is not an object, is lacking the “rows” object property, etc. Something like “getData response does not match expected format”.

The above code is an extremely simplified example; I actually made this mistake myself, and here are a bunch of ways you can end up accidentally returning the wrong thing in getData and triggering this error:

- Using promises or other async code in getData and trying to return from inside them. GDS will not see the final result, even if your promise succeeds, and it will see null/undefined/void as the returned object

- Using a bunch of if/else blocks to return different objects based on the request, but forgetting to have a “default” or missing a scenario not covered by your if/else blocks

- Not returning due to an error case (maybe in a try/catch set of blocks), but not throwing an exception to replace the “column number” error.

- You should use “cc.newUserError()” to throw a user-facing error. See the Google Data Studio guide on handling exceptions for details.

Gotcha: Publishing and sharing your connector

So, your connector is now functional and you are ready to share it with others. Deploying to your own account has been as easy a few clicks, so publishing and sharing the connector with others should be relatively straightforward too, right? Not so fast…

“Open Source” Community Connector vs Partner Connector

The first thing to note is that GDS offers two very distinct paths forward for “publishing” your connector in a way that it will show up in the public connector gallery (here). A table comparing these two options can be found on the “Publishing Overview” connector guide page. However, I would like to add some additional commentary here that I feel obligated to share.

Appsscript.json sources array – requirement for both options

Regardless of which publishing option you pick, there is a requirement that is easy to miss at first glance. In your appsscript.json meta file, there is a property called “sources”, which should have an array of strings corresponding to various sources that your connector uses. The catch here is that you should actually be only using source strings that exist as part of a source enum, which is maintained in a separate repository, the “Data Registry Repository“. So if you are building a connector that uses a brand new source that no other connector uses, you will need to open a PR into that repo to add the new source, before you publish your connector. See this page for details.

The “Open Source” Community Connector Option is… unusual…

First, you can find the full list of requirements for publishing the connector as an open source community connector here. What I would like to add, is that it is a little odd how this process works.

Community Connectors are maintained in a single mono-repo, googledatastudio/community-connectors, which are apparently synced across automatically to the connector gallery. To add your connector, you would first make sure it meets all the requirements, then make a pull-request, like this one, to add your connectors code to the repo. It also looks like one of the requirements to get your PR approved is to have signed the Google CLA (Contributor License Agreement).

Once the PR is approved, and your connector is in their repo, Google will start handling pretty much all parts of your connector; they are responsible for deployments, users are instructed to open issues with the connector on their repo instead of yours, and the only way to update the code is to make a new PR against the repo and wait for Google to merge and then redeploy. Another strange thing is that this whole workflow seems to be currently handled by just one Google employee (“diminishedprime”), but it looks like he is doing an impressive job responding to all the PRs and even taking time to give pointers to devs who are missing things on their PRs. Still, it seems like Google can afford to lend some more employees to cover the GDS connector repo.

The main downside to this publishing route is that you are relinquishing a huge amount of control to Google; they control the deploys, code updates, or could remove your connector entirely if you fail to update it to address issues. It looks like this might not always be apparent to everyone, as Google has taken to warning devs of new PRs about the downside to publishing as a community connector:

This connector might be better served as a partner connector. Please see Publish A Community Connector for an outline of why you might pick open-source vs Partner.

We can certainly merge this is an an open-source connector, but keep in mind that our support for this is limited.

If you need to make a fix/update to the connector, you’ll have to make a PR to this repo and we can only review those as we have time. If (potentially) long delays are okay with you (and your customers), we can move ahead with the review, but our guidance is anything business critical should go through the partner-path, or share deployment links directly.

Partner Connector Option

The partner connection option is more straightforward than the Open Source Community Connector option. The page of requirements and steps to take can be found here.

What about private publishing?

If you have built a connector that you just want to share with a few other people (or maybe internally at the company you work for), and not publish to the connector gallery, you might be tempted to just copy and paste the install link from the “deploy from manifest” popup in Apps Script editor. However, if you do this after starting from the default Apps Script environment, and send it to another user, they will likely get this error when they try to open the link:

The linked connectorId was invalid. Check the connectorId and try again.

OK, so how do we share a connector privately with other users? You might try next having the other use try adding the connector by ID instead of hyperlink, by going to the “select connector” page and then hitting the “DEVELOPERS” button on the upper right and pasting your deployment ID. But, if they did that, they would likely get this message:

The connector manifest could not be retrieved or is invalid. Check the connector and try again.

Hmm. Luckily this Google community post points us in the right direction. For both these errors, the issue is the permissions setting on the actual Apps Script project code. In order for other uses to “see” our manifest file, and actually load the connector, the permissions for the connector project needs to be set to give those users “view” privileges, at a minimum. You can change sharing permissions by clicking the “share” button in the upper right of the Apps Script IDE, or going to “File -> Share”.

If you work at a company, and want to keep the connector internal, you could set the permission to share with “people at this organization”. Otherwise, you should set it to “Anyone who has the link can view”, or, individually add the email addresses of everyone you want to share the connector with, and give them view access. Note that giving users view access technically means that they can view the source code of the connector, but they would need to know the URL of the script, whereas you will be sharing a deployment ID or deployment URL with them.

Awesome to just have something, anything about community connectors anywhere… the debugging part is the killer for me it’s like working blind. That and the lack of working examples anywhere – in particular an example linking to google’s own bigquery. There are none! Anywhere.